I enjoy being deceived sometimes. The magicians Penn and Teller do a great job of deceiving audiences. The website https://www.thispersondoesnotexist.com/ demonstrates a different kind of deception: computer-generated images that look incredibly realistic. This is different than the deepfake videos where one person's face is attached to another's body.

The method the site uses is called "Generative adversarial networks" or GANs for short. The concept is actually quite simple, even if the details are more complex. Before we get there we have to understand three things: discriminative neural networks, generative neural networks, and the training of neural networks. These are essential parts of "Artificial Intelligence".

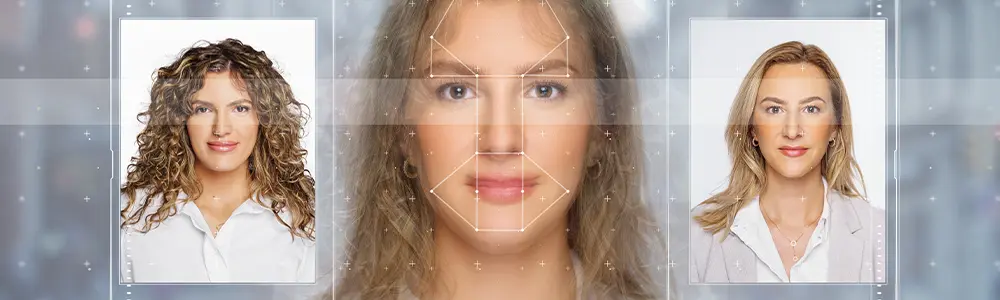

The site thispersondoesnotexist.com has examples of GAN-generated faces.

Discriminative neural networks are what we often think of when we think of applications of artificial intelligence. They are basically software that can process some input and classify it as "very probably" something or "probably not" something. For instance, it could look at a picture of an animal (a cow) and say with good assurance that it was not a horse (if it had been trained to do so - more on that later). Or it could look at my hand-written digits and decide which was a '7' and which was a '3' - again with the proper training.

Generative networks, on the other hand, are configured to take some input and try to produce some sort of output. One might take the printed digit '7' and try to produce something that looks like a handwritten 7.

How these networks do their work is called training. A discriminative network might be trained with thousands of photos of animals and told that those that had images of horses were indeed horses and the others were not. A discriminative network could be trained with thousands of photos of individuals and their names and then when presented with one of those individuals, be able to decide which one it is.

The training is a complex process and can take a long time, even with powerful computers.

The idea of the GAN then is to combine the generative and discriminative networks. The former generates what it thinks is a face (in this case) and the discriminative network says something along the lines of "that's not a very good face, try again" or "that looks very real". For that to work, the generative net needs to "learn" how to create faces and the discriminative network needs to understand what a real face looks like.

Here is a good explanation of the complex process including video examples: https://www.lyrn.ai/2018/12/26/a-style-based-generator-architecture-for-generative-adversarial-networks/. You can safely ignore a lot of the details and still get a very good understanding.

From Youtube

Are GANs Dangerous?

The images created by the networks we have discussed here are probably not too dangerous in and of themselves. They could be used in videos or elsewhere and not require model releases, for example, and the deception would be relatively harmless. But that is not universally the case.

An article from Nextgov describes how deceptive satellite images could be created using GANs and how those could be used by an adversary in a military context. There are significant private sector concerns as well. At the core of these issues is whether or not data can be trusted. If we see an image of a person, is she real or generated by AI? If we see a satellite image, is it real or generated by AI? People tend to believe w

hat they see - or think they see - and faked images can be even more convincing than a stage magician.